Building a Data Democratization Platform for an Energy Utility Provider

A major player in the electricity and utilities sector struggled to make its IoT and operational data visible and accessible. Large volumes of data were being collected from energy meters, IoT sensors, and operational systems, but without a centralized, user-friendly data platform, business users could not independently reach actionable insights. Data silos, unclear KPI definitions, and missing CI/CD processes further hindered innovation and slowed data-driven decision-making. The key challenge was to build an end-to-end data platform from scratch that could handle both streaming and batch data, transform it into meaningful KPIs, and enable consistent, scalable reporting across teams.

10/3/2023, 3 min read

Sector: Electricity / power generation / utilities

Duration: July 2022 - Jan 2023

Work Delivered:

End-to-End Data Platform using Databricks:

Designed and implemented a comprehensive data platform using Databricks on AWS, covering ingestion, processing, orchestration, and reporting.

Developed structured workflows for both batch and real-time (streaming) ingestion of IoT and operational data.

Data Integration and Transformation:

Built pipelines to process raw data into bronze, silver, and gold layers.

Created SQL stored procedures and PySpark routines to transform and aggregate data according to business requirements.

Designed scalable, business-driven data models in Snowflake’s gold layer aligned with key performance indicators (KPIs).
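
The bronze, silver, and gold flow described above can be illustrated with a minimal sketch in plain Python. This is not the project's actual pipeline code: the field names (`meter_id`, `ts`, `kwh`), the sample rows, and the daily-consumption KPI are assumptions chosen for illustration, and a real implementation would run on Spark and Snowflake tables rather than in-memory lists.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical raw meter readings as they might land in the bronze layer.
BRONZE = [
    {"meter_id": "m1", "ts": "2022-08-01T00:15:00", "kwh": "1.5"},
    {"meter_id": "m1", "ts": "2022-08-01T00:30:00", "kwh": "2.0"},
    {"meter_id": "m2", "ts": "2022-08-01T00:15:00", "kwh": "bad"},  # unparseable value
    {"meter_id": "m2", "ts": "2022-08-01T00:30:00", "kwh": "0.5"},
    {"meter_id": "m1", "ts": "2022-08-01T00:30:00", "kwh": "2.0"},  # duplicate reading
]


def to_silver(bronze_rows):
    """Silver: typed, cleaned rows with bad values and duplicates dropped."""
    seen = set()
    silver_rows = []
    for row in bronze_rows:
        try:
            kwh = float(row["kwh"])
            ts = datetime.fromisoformat(row["ts"])
        except ValueError:
            continue  # skip rows that cannot be parsed
        key = (row["meter_id"], ts)
        if key in seen:
            continue  # skip repeated readings for the same meter and timestamp
        seen.add(key)
        silver_rows.append({"meter_id": row["meter_id"], "ts": ts, "kwh": kwh})
    return silver_rows


def to_gold(silver_rows):
    """Gold: an assumed KPI, total kWh per meter per day."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[(row["meter_id"], row["ts"].date().isoformat())] += row["kwh"]
    return dict(totals)


silver = to_silver(BRONZE)
gold = to_gold(silver)
```

Keeping each layer as a separate step means the raw data stays untouched in bronze, so a KPI definition that changes later can be recomputed from silver without re-ingesting anything.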

Infrastructure and CI/CD Setup:

Deployed and configured Databricks infrastructure on AWS with best practices for security, scalability, and cost-efficiency.

Implemented a complete CI/CD process for Databricks notebooks and Snowflake infrastructure, ensuring streamlined deployments.

Introduced multithreading in PySpark workflows and optimized SQL queries to improve processing speed and reduce cloud costs.
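
The case study does not say how multithreading was applied. One common pattern is to submit several independent jobs from driver-side threads so they run concurrently instead of one after another. The sketch below shows that pattern with Python's standard `concurrent.futures`; the table names are hypothetical, and `run_table_job` is a placeholder standing in for whatever Spark action would process one table.

```python
from concurrent.futures import ThreadPoolExecutor


def run_table_job(table_name):
    # Placeholder (assumption): in a real workflow this would trigger a Spark
    # action such as reading, transforming, and writing one table.
    return f"{table_name}: done"


def run_in_parallel(table_names, max_workers=4):
    """Run one job per table on a thread pool; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_table_job, table_names))


# Hypothetical table names, for illustration only.
results = run_in_parallel(["meter_readings", "sensor_events", "outages"])
```

Threads fit this case because each one mostly waits on the cluster to finish its job, so the driver's own CPU is rarely the bottleneck.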

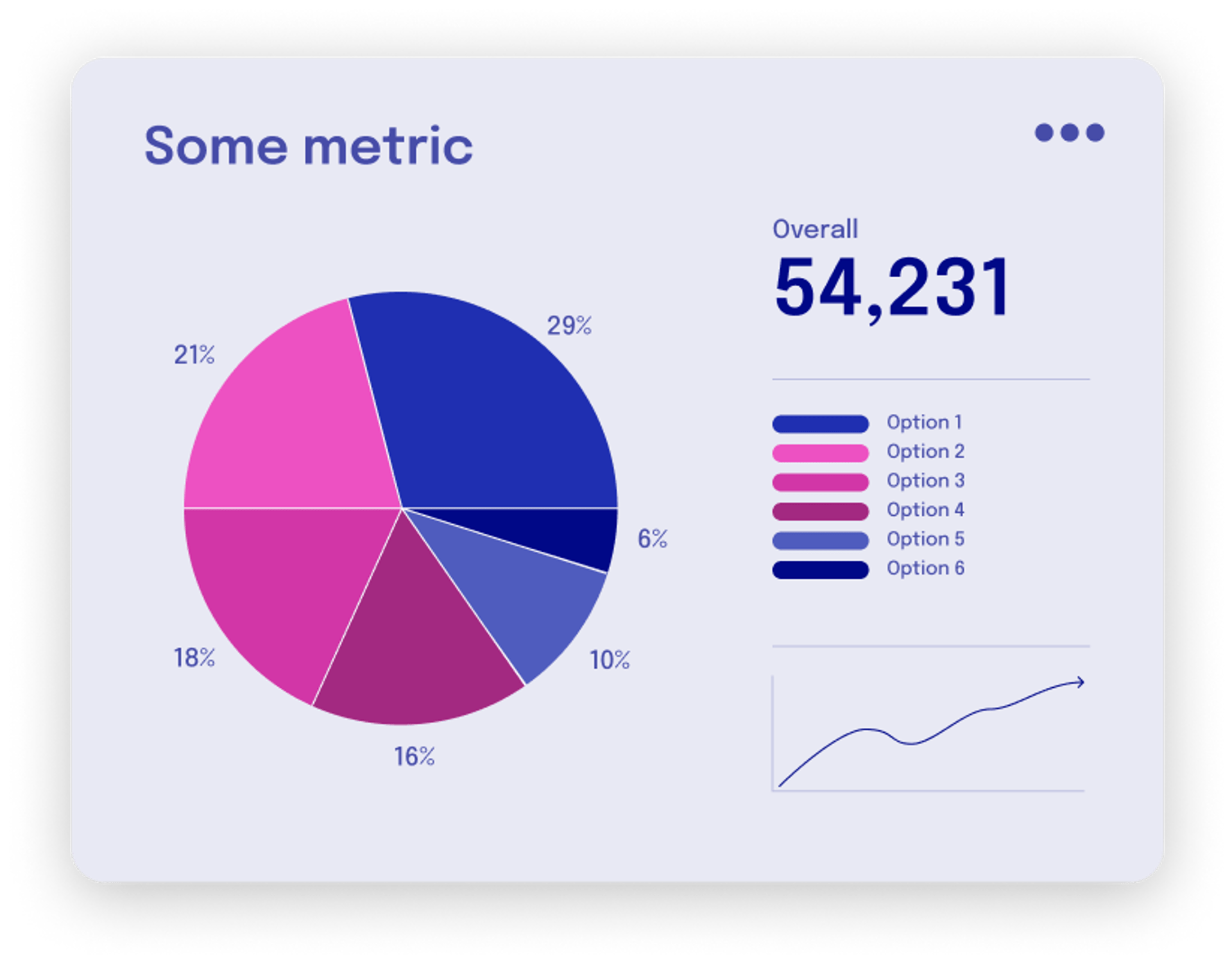

BI Dashboards and Data Democratization:

Integrated the gold layer with BI tools to allow non-technical users easy access to KPIs and operational insights.

Provided self-service capabilities to the business teams to drive data-informed decision-making across departments.

Pressure Points / Challenges:

Unclear Business Requirements:

KPI definitions were not fully developed at the beginning of the project, requiring a flexible and iterative approach to data modeling.

Domain Knowledge Gap:

Initial unfamiliarity with the energy sector’s operational metrics and IoT data structures added complexity to the requirements gathering phase.

Lack of Established CI/CD and Orchestration:

No existing processes for automating deployments of Databricks workflows or Snowflake infrastructure.

Existing queries and data processing tasks were inefficient, leading to high memory usage and cloud costs.

Program/Project Overview:

Scope:

Part of a broader enterprise initiative to consolidate IoT and operational data into a single platform for anomaly detection, cost optimization, and enhanced decision-making.

Collaboration:

Worked in close collaboration with data engineers, analysts, product managers, and business stakeholders to align technical implementation with business needs.

Conducted multiple feedback loops and iterative prototyping to refine the KPIs and reporting models.

Problems and Pains (Pre-Project):

Limited Data Visibility: Business teams had limited or no access to real-time or historical operational data.

Disparate Systems: Fragmented reporting systems resulted in inconsistent and delayed insights.

Operational Inefficiencies: Manual deployments and lack of orchestration processes led to slow development cycles.

High Cloud Costs: Poorly optimized queries and inefficient processing increased operational expenses.

Quantified Impact of Pre-Project Pain Points:

Cost Inefficiency: Inefficient query processing led to significant overuse of compute credits.

Delayed Decision-Making: Manual reporting processes delayed operational responses and business decisions.

Limited Predictive Capabilities: Without structured historical data, proactive anomaly detection was not possible.

Promises:

Data-Driven Decision-Making: Empower business teams with timely, data-backed insights.

Full CI/CD Automation: Implement continuous integration and deployment processes for both the Databricks and Snowflake platforms.

Performance Optimization: Achieve faster data processing through query optimization and parallel processing techniques.

KPI-Based Dashboards: Deliver BI dashboards connected directly to the Snowflake gold layer, improving operational visibility and energy consumption monitoring.

Problems and Pains (In-Project):

Complex Data Modeling: Designing flexible yet robust data models was challenging due to evolving business needs.

Mapping Business Needs to Technical Design: Bridging the gap between non-technical business requirements and technical specifications required several iterations.

Solution:

Built an initial prototype using sample datasets and iteratively refined the solution based on business feedback, ensuring requirements were accurately captured and implemented.

Payoffs:

For the Organization:

Cost Savings: Significant reduction in cloud credit usage through performance optimization.

Improved Decision-Making: Centralized, real-time data access enabled faster and more informed operational decisions.

Operational Efficiency: Automated CI/CD pipelines and orchestrated workflows led to smoother and faster deployment cycles.

Predictive Insights: Early detection of anomalies helped reduce downtime and improve energy delivery efficiency.
